Evolutionary history of plants

[Figure: spores with a …-shaped scar, about 30–35 μm across.]

The evolution of plants has resulted in increasing levels of complexity, from the earliest algal mats, through bryophytes, lycopods and ferns, to the complex gymnosperms and angiosperms of today. While the groups which appeared earlier continue to thrive, especially in the environments in which they evolved, each new grade of organisation has eventually become more "successful" than its predecessors by most measures.

Evidence suggests that an algal scum formed on the land 1,200 million years ago, but it was not until the Ordovician period, around 450 million years ago, that land plants appeared.[1] These began to diversify in the late Silurian period, around 420 million years ago, and the results of their diversification are displayed in remarkable detail in an early Devonian fossil assemblage known as the Rhynie chert. This chert preserved early plants in cellular detail, petrified in volcanic springs. By the middle of the Devonian period most of the features recognised in plants today were present, including roots, leaves and secondary wood, and by late Devonian times seeds had evolved.[2] Late Devonian plants had thereby reached a degree of sophistication that allowed them to form forests of tall trees. Evolutionary innovation continued after the Devonian period. Most plant groups were relatively unscathed by the Permo-Triassic extinction event, although the structures of communities changed. This may have set the scene for the evolution of flowering plants in the Triassic (~200 million years ago), which diversified explosively in the Cretaceous and Tertiary. The latest major group of plants to evolve was the grasses, which became important in the mid Tertiary, from around 40 million years ago. The grasses, as well as many other groups, evolved new metabolic mechanisms to survive the low CO2 and warm, dry conditions of the tropics over the last 10 million years.


Colonisation of land

Land plants evolved from green algae, perhaps as early as 510 million years ago;[3] their closest living relatives are the charophytes, specifically Charales. Assuming that the habit of the Charales has changed little since the lineages diverged, this means that the land plants evolved from a branched, filamentous, haplontic alga dwelling in shallow fresh water,[4] perhaps at the edge of seasonally desiccating pools.[3] Co-operative interactions with fungi may have helped early plants adapt to the stresses of the terrestrial realm.[5]

Plants were not the first photosynthesisers on land, though: consideration of weathering rates suggests that organisms were already living on the land 1,200 million years ago,[3] and microbial fossils have been found in freshwater lake deposits from 1,000 million years ago,[6] but the carbon isotope record suggests that they were too scarce to impact the atmospheric composition until around 850 million years ago.[7] These organisms were probably small and simple, forming little more than an "algal scum".[3]

The first evidence of plants on land comes from spore tetrads[8] attributed to land plants from the Mid-Ordovician (early Llanvirn, ~470 million years ago).[9] Spore tetrads consist of four identical, connected spores, produced when a single cell undergoes meiosis. Spore tetrads are produced by all land plants and by some algae.[8] The microstructure of the earliest spores resembles that of modern liverwort spores, suggesting that the plants producing them had an equivalent grade of organisation.[10] It could be that atmospheric 'poisoning' prevented eukaryotes from colonising the land before this,[11] or it could simply have taken a long time for the necessary complexity to evolve.[12]

Trilete spores, the progeny of spore tetrads, appear soon afterwards, in the Late Ordovician.[13] Depending on exactly when the tetrad splits, each of the four spores may bear a "trilete mark", a three-rayed scar reflecting the points at which each cell was squashed up against its neighbours.[8] However, for this to happen, the spore walls must be sturdy and resistant at an early stage. This resistance is closely associated with having a desiccation-resistant outer wall, a trait only of use when spores have to survive out of water. Indeed, even those embryophytes that have returned to the water lack a resistant wall, and thus don't bear trilete marks.[8] A close examination of algal spores shows that none bear trilete marks, either because their walls are not resistant enough or, in those rare cases where they are, because the spores disperse before they are squashed enough to develop the mark, or don't fit into a tetrahedral tetrad.[8]


The earliest megafossils of land plants were thalloid organisms, which dwelt in fluvial wetlands and are found to have covered most of an early Silurian flood plain. They could only survive when the land was waterlogged.[14]

Once plants had reached the land, there were two approaches to coping with desiccation. The bryophytes avoid it or give in to it, either restricting their ranges to moist settings or drying out and putting their metabolism "on hold" until more water arrives. Tracheophytes resist desiccation. They all bear a waterproof outer cuticle layer wherever they are exposed to air (as do some bryophytes), to reduce water loss; but since a total covering would cut them off from CO2 in the atmosphere, they rapidly evolved stomata, small openings that allow gas exchange. Tracheophytes also developed vascular tissue to aid the movement of water within the organism (see below), and moved away from a gametophyte-dominated life cycle (see below).

The establishment of a land-based flora permitted the accumulation of oxygen in the atmosphere as never before, as the new hordes of land plants pumped it out as a waste product. When this concentration rose above 13%, wildfire became possible. This is first recorded in the early Silurian fossil record by charcoalified plant fossils.[15] Apart from a controversial gap in the Late Devonian, charcoal has been present ever since.

Charcoalification is an important taphonomic mode. Wildfire drives off the volatile compounds, leaving only a shell of pure carbon. This is not a viable food source for herbivores or detritivores, so it is likely to be preserved; it is also robust, so it can withstand pressure and display exquisite, sometimes sub-cellular, detail.

Changing life cycles

All multicellular plants have a life cycle comprising two phases (often confusingly referred to as "generations").[16] One is termed the gametophyte, has a single set of chromosomes (denoted 1n), and produces gametes (sperm and eggs). The other is termed the sporophyte, has paired chromosomes (denoted 2n), and produces spores. The two phases may be identical, or phenomenally different.

The overwhelming pattern in plant evolution is for a reduction of the gametophytic phase, and the increase in sporophyte dominance. The algal ancestors to land plants were almost certainly haplobiontic, being haploid for all their life cycles, with a unicellular zygote providing the 2n stage. All land plants (i.e. embryophytes) are diplobiontic – that is, both the haploid and diploid stages are multicellular.[16]

There are two competing theories to explain the appearance of a diplobiontic lifecycle.

The interpolation theory (also known as the antithetic or intercalary theory)[17] holds that the sporophyte phase was a fundamentally new invention, caused by the mitotic division of a freshly germinated zygote, continuing until meiosis produces spores. This theory implies that the first sporophytes would bear a very different morphology to the gametophyte, on which they would have been dependent.[17] This seems to fit well with what we know of the bryophytes, in which a vegetative thalloid gametophyte is parasitised by simple sporophytes, which often comprise no more than a sporangium on a stalk. Increasing complexity of the ancestrally simple sporophyte, including the eventual acquisition of photosynthetic cells, would free it from its dependence on a gametophyte, as we see in some hornworts (Anthoceros), and eventually result in the sporophyte developing organs and vascular tissue, and becoming the dominant phase, as in the tracheophytes (vascular plants).[16] This theory may be supported by observations that smaller Cooksonia individuals must have been supported by a gametophyte generation. The observed appearance of larger axial sizes, with room for photosynthetic tissue and thus self-sustainability, provides a possible route for the development of a self-sufficient sporophyte phase.[17]

The alternative hypothesis is termed the transformation theory (or homologous theory). This posits that the sporophyte appeared suddenly through a delay in the occurrence of meiosis after the zygote germinated. Since the same genetic material would be employed, the haploid and diploid phases would look the same. This explains the behaviour of some algae, which produce alternating phases of identical sporophytes and gametophytes. Subsequent adaptation to the desiccating land environment, which makes sexual reproduction difficult, would result in the simplification of the sexually active gametophyte, and elaboration of the sporophyte phase to better disperse the waterproof spores.[16] The tissue of sporophytes and gametophytes preserved in the Rhynie chert is of similar complexity, which is taken to support this hypothesis.[17]

Water transport

In order to photosynthesise, plants must take up CO2 from the atmosphere. However, this comes at a price: while stomata are open to allow CO2 to enter, water can evaporate.[18] Water is lost much faster than CO2 is absorbed, so plants need to replace it, and have developed systems to transport water from the moist soil to the site of photosynthesis.[18] Early plants drew water between the walls of their cells, then evolved the ability to control water loss (and CO2 acquisition) through the use of stomata. Specialised water transport tissues soon evolved in the form of hydroids, tracheids, then secondary xylem, followed by an endodermis and ultimately vessels.[18]

The high CO2 levels of the Silu-Devonian, when early plants were colonising land, meant that the need for water was relatively low in the early days. As CO2 was withdrawn from the atmosphere by plants, more water was lost in its capture, and more elegant transport mechanisms evolved.[18] As water transport mechanisms and waterproof cuticles evolved, plants could survive without being continually covered by a film of water. This transition from poikilohydry to homoiohydry opened up new potential for colonisation.[18] Plants then faced a trade-off between transporting water as efficiently as possible and preventing their conducting vessels from imploding and cavitating.

During the Silurian, CO2 was readily available, so little water needed expending to acquire it. By the end of the Carboniferous, when CO2 levels had lowered to something approaching today's, around 17 times more water was lost per unit of CO2 uptake.[18] However, even in these "easy" early days, water was at a premium, and had to be transported to parts of the plant from the wet soil to avoid desiccation. This early water transport took advantage of the cohesion-tension mechanism inherent in water. Water has a tendency to diffuse to areas that are drier, and this process is accelerated when water can be wicked along a fabric with small spaces. In small passages, such as that between the plant cell walls (or in tracheids), a column of water behaves like rubber: when molecules evaporate from one end, they literally pull the molecules behind them along the channels. Therefore transpiration alone provided the driving force for water transport in early plants.[18] However, without dedicated transport vessels, the cohesion-tension mechanism cannot transport water more than about 2 cm, severely limiting the size of the earliest plants.[18] This process demands a steady supply of water from one end to maintain the chains; to avoid exhausting it, plants developed a waterproof cuticle. Early cuticle may not have had pores but did not cover the entire plant surface, so that gas exchange could continue.[18] However, dehydration at times was inevitable; early plants coped with this by storing a lot of water between their cell walls and, when necessary, sitting out the tough times by putting life "on hold" until more water was supplied.[18]

In order to be free from the constraints of small size and constant moisture that the parenchymatic transport system imposed, plants needed a more efficient water transport system. During the early Silurian, they developed specialised cells, which were lignified (or bore similar chemical compounds)[18] to avoid implosion; this process coincided with cell death, allowing their contents to be emptied and water to be passed through them.[18] These wider, dead, empty cells were a million times more conductive than the inter-cell method, giving the potential for transport over longer distances, and higher CO2 diffusion rates.

The first macrofossils to bear water-transport tubes in situ are the early Devonian pretracheophytes Aglaophyton and Horneophyton, which have structures very similar to the hydroids of modern mosses. Plants continued to innovate new ways of reducing the resistance to flow within their cells, thereby increasing the efficiency of their water transport. Bands on the walls of tubes, in fact apparent from the early Silurian onwards,[19] are an early improvisation to aid the easy flow of water.[20] Banded tubes, as well as tubes with pitted ornamentation on their walls, were lignified[21] and, when they form single celled conduits, are considered to be tracheids. These, the "next generation" of transport cell design, have a more rigid structure than hydroids, allowing them to cope with higher levels of water pressure.[18] Tracheids may have a single evolutionary origin, possibly within the hornworts,[22] uniting all tracheophytes (but they may have evolved more than once).[18]

Water transport requires regulation, and dynamic control is provided by stomata.[23] By adjusting the amount of gas exchange, they can restrict the amount of water lost through transpiration. This is an important role where water supply is not constant, and indeed stomata appear to have evolved before tracheids, being present in the non-vascular hornworts.[18]

An endodermis probably evolved during the Silu-Devonian, but the first fossil evidence for such a structure is Carboniferous.[18] This structure in the roots surrounds the water transport tissue and regulates ion exchange (and keeps pathogens and other unwanted material out of the water transport system). The endodermis can also provide an upward pressure, forcing water out of the roots when transpiration alone is not enough of a driver.

Once plants had evolved this level of controlled water transport, they were truly homoiohydric, able to extract water from their environment through root-like organs rather than relying on a film of surface moisture, enabling them to grow to much greater size.[18] As a result of their independence from their surroundings, they lost their ability to survive desiccation – a costly trait to retain.[18]

During the Devonian, maximum xylem diameter increased with time, while the minimum diameter remained fairly constant.[20] By the middle Devonian, the tracheid diameter of some plant lineages[24] had plateaued.[20] Wider tracheids allow water to be transported faster, but the overall transport rate also depends on the total cross-sectional area of the xylem bundle itself.[20] The increase in vascular bundle thickness further seems to correlate with the width of plant axes, and plant height; it is also closely related to the appearance of leaves[20] and increased stomatal density, both of which would increase the demand for water.[18]
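The interplay between the width of individual conduits and the total cross-sectional area of the xylem can be illustrated with a minimal Python sketch. It assumes idealised, smooth cylindrical conduits obeying the Hagen-Poiseuille law for laminar flow (a standard physical result, not taken from the cited sources) and ignores end walls and pits; the function names, the water viscosity value and the example dimensions are hypothetical choices for illustration only.

```python
import math

# Assumed value: viscosity of water near 20 °C, in pascal-seconds.
WATER_VISCOSITY_PA_S = 1.0e-3


def tube_conductance(diameter_m: float, length_m: float = 1.0,
                     viscosity_pa_s: float = WATER_VISCOSITY_PA_S) -> float:
    """Hydraulic conductance (m^3 s^-1 Pa^-1) of one ideal cylindrical tube,
    from the Hagen-Poiseuille law: K = pi * d**4 / (128 * eta * L)."""
    return math.pi * diameter_m ** 4 / (128 * viscosity_pa_s * length_m)


def bundle_conductance(diameter_m: float, lumen_area_m2: float,
                       length_m: float = 1.0) -> float:
    """Conductance of identical parallel tubes that together fill a fixed
    lumen area. The tube count is treated as a continuous quantity, and
    end walls and pits are ignored (hypothetical idealisation)."""
    area_per_tube = math.pi * diameter_m ** 2 / 4
    n_tubes = lumen_area_m2 / area_per_tube
    return n_tubes * tube_conductance(diameter_m, length_m)


if __name__ == "__main__":
    area = 1.0e-6  # 1 mm^2 of open conduit lumen (hypothetical example)
    # Per tube, doubling diameter multiplies conductance by 2**4.
    print(tube_conductance(40e-6) / tube_conductance(20e-6))
    # For the same total lumen area, it multiplies conductance by only 2**2.
    print(bundle_conductance(40e-6, area) / bundle_conductance(20e-6, area))
    # At fixed diameter, conductance scales linearly with total area.
    print(bundle_conductance(20e-6, 2 * area) / bundle_conductance(20e-6, area))
```

Under these assumptions, doubling conduit diameter raises the conductance of a single tube roughly sixteenfold, but for a fixed total lumen area only about fourfold, because fewer wide tubes fit in the same space; enlarging the bundle, by contrast, raises conductance in direct proportion to its area. This is one way to see why both wider tracheids and thicker vascular bundles mattered.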

While wider tracheids with robust walls make it possible to achieve higher water transport pressures, this increases the problem of cavitation.[18] Cavitation occurs when a bubble of air forms within a vessel, breaking the bonds between chains of water molecules and preventing them from pulling more water up with their cohesive tension. A tracheid, once cavitated, cannot have its embolism removed and return to service (except in a few advanced angiosperms which have developed a mechanism for doing so). It therefore pays plants to avoid cavitation. For this reason, pits in tracheid walls have very small diameters, to prevent air from entering and allowing bubbles to nucleate.[18] Freeze-thaw cycles are a major cause of cavitation.[18] Damage to a tracheid's wall almost inevitably leads to air leaking in and cavitation, hence the importance of many tracheids working in parallel.[18]

Cavitation is hard to avoid, but once it has occurred plants have a range of mechanisms to contain the damage.[18] Small pits link adjacent conduits to allow fluid to flow between them, but not air; ironically, these pits, which prevent the spread of embolisms, are also a major cause of them.[18] These pitted surfaces further reduce the flow of water through the xylem by as much as 30%.[18] By the Jurassic, conifers had developed an ingenious improvement, using valve-like structures to isolate cavitated elements. In these torus-margo pits, a thickened central disc (the torus) is held in a porous membrane (the margo), like a blob floating in the middle of a doughnut; when one side depressurises, the torus is sucked against the pit opening and blocks further flow.[18] Other plants simply accept cavitation; for instance, oaks grow a ring of wide vessels at the start of each spring, none of which survive the winter frosts. Maples use root pressure each spring to force sap upwards from the roots, squeezing out any air bubbles.

Growing to height also employed another trait of tracheids – the support offered by their lignified walls. Defunct tracheids were retained to form a strong, woody stem, produced in most instances by a secondary xylem. However, in early plants, tracheids were too mechanically vulnerable, and retained a central position, with a layer of tough sclerenchyma on the outer rim of the stems.[18] Even when tracheids do take a structural role, they are supported by sclerenchymatic tissue.

Tracheids have closed end walls, which impose a great deal of resistance on flow;[20] vessel members have perforated end walls, and are arranged in series to operate as if they were one continuous vessel.[20] The function of end walls, which were the default state in the Devonian, was probably to avoid embolisms. An embolism forms when an air bubble is created in a tracheid. This may happen as a result of freezing, or by gases coming out of solution. Once an embolism is formed, it usually cannot be removed (but see later); the affected cell cannot pull water up, and is rendered useless.

Setting end walls aside, the tracheids of prevascular plants were able to operate at the same hydraulic conductivity as those of the first vascular plant, Cooksonia.[20]

The size of tracheids is limited as they comprise a single cell; this limits their length, which in turn limits their maximum useful diameter to 80 μm.[18] Conductivity grows with the fourth power of diameter, so increased diameter has huge rewards; vessel elements, consisting of a number of cells, joined at their ends, overcame this limit and allowed larger tubes to form, reaching diameters of up to 500 μm, and lengths of up to 10 m.[18]
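The fourth-power rule quoted above follows from the Hagen-Poiseuille law for laminar flow through an ideal cylindrical tube. As a rough worked example, assuming the same pressure difference and tube length and ignoring end walls and pits (real conduits fall well short of this ideal), comparing the 500 μm vessel maximum with the 80 μm tracheid limit gives:

```latex
Q = \frac{\pi \, \Delta P \, d^{4}}{128 \, \eta \, L}
\qquad\Longrightarrow\qquad
\frac{Q_{500\,\mu\mathrm{m}}}{Q_{80\,\mu\mathrm{m}}}
  = \left(\frac{500}{80}\right)^{4} = 6.25^{4} \approx 1.5 \times 10^{3}
```

Here Q is the volumetric flow rate, ΔP the pressure difference along the tube, d its diameter, η the viscosity of the sap and L the tube length. The result is an idealised per-tube comparison, not a measured value.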

Vessels first evolved during the dry, low CO2 periods of the late Permian, in the horsetails, ferns and Selaginellales independently, and later appeared in the mid Cretaceous in angiosperms and gnetophytes.[18] Vessels allow the same cross-sectional area of wood to transport around a hundred times more water than tracheids![18] This allowed plants to fill more of their stems with structural fibres, and also opened a new niche to vines, which could transport water without being as thick as the tree they grew on.[18] Despite these advantages, tracheid-based wood is a lot lighter, thus cheaper to make, as vessels need to be much more reinforced to avoid cavitation.[18]

Evolution of leaves

Leaves today are, in almost all instances, an adaptation to increase the amount of sunlight that can be captured for photosynthesis. Leaves certainly evolved more than once, and probably originated as spiny outgrowths to protect early plants from herbivory.

The rhyniophytes of the Rhynie chert comprised nothing more than slender, unornamented axes. The early to middle Devonian trimerophytes, therefore, are the first evidence we have of anything that could be considered leafy. This group of vascular plants is recognisable by its masses of terminal sporangia, which adorn the ends of axes which may bifurcate or trifurcate.[23] Some organisms, such as Psilophyton, bore enations. These are small, spiny outgrowths of the stem, lacking their own vascular supply.

Around the same time, the zosterophyllophytes were becoming important. This group is recognisable by its kidney-shaped sporangia, which grew on short lateral branches close to the main axes. They sometimes branched in a distinctive H-shape.[23] The majority of this group bore pronounced spines on their axes. However, none of these had a vascular trace, and the first evidence of vascularised enations occurs in the Rhynie genus Asteroxylon. The spines of Asteroxylon had a primitive vascular supply; at the very least, leaf traces could be seen departing from the central protostele towards each individual "leaf". A fossil known as Baragwanathia appears in the fossil record slightly earlier, in the late Silurian.[25] In this organism, these leaf traces continue into the leaf to form its mid-vein.[26] One theory, the "enation theory", holds that the leaves developed by outgrowths of the protostele connecting with existing enations, but it is also possible that microphylls evolved by a branching axis forming "webbing".[23]

Asteroxylon[27] and Baragwanathia are widely regarded as primitive lycopods.[23] The lycopods are still extant today, familiar as the quillwort Isoetes and the club mosses. Lycopods bear distinctive microphylls, leaves with a single vascular trace. Microphylls could grow to some size (the Lepidodendrales boasted microphylls over a metre in length), but almost all bear just the one vascular bundle. (An exception is the branching Selaginella.)

The more familiar leaves, megaphylls, are thought to have separate origins; indeed, they appeared four times independently, in the ferns, horsetails, progymnosperms and seed plants.[28] They appear to have originated from dichotomising branches, which first overlapped (or "overtopped") one another, and eventually developed "webbing" and evolved into gradually more leaf-like structures.[26] So megaphylls, by this "telome theory", are composed of a group of webbed branches,[26] hence the "leaf gap" left where the leaf's vascular bundle departs from that of the main branch resembles two axes splitting.[26] In each of the four groups to evolve megaphylls, their leaves first evolved during the late Devonian to early Carboniferous, diversifying rapidly until the designs settled down in the mid Carboniferous.[28]

The cessation of further diversification can be attributed to developmental constraints,[28] but why did it take so long for leaves to evolve in the first place? Plants had been on the land for at least 50 million years before megaphylls became significant. However, small, rare megaphylls are known from the early Devonian genus Eophyllophyton, so development could not have been a barrier to their appearance.[29] The best explanation so far incorporates observations that atmospheric CO2 was declining rapidly during this time, falling by around 90% during the Devonian.[30] This corresponded with a 100-fold increase in stomatal density. Stomata allow water to evaporate from leaves, which causes them to cool. It appears that the low stomatal density in the early Devonian meant that evaporation was limited, and leaves would overheat if they grew to any size. The stomatal density could not increase, as the primitive steles and limited root systems would not be able to supply water quickly enough to match the rate of transpiration.[31]

Clearly, leaves are not always beneficial, as illustrated by the frequent occurrence of secondary loss of leaves, famously exemplified by cacti and the "whisk fern" Psilotum.

Secondary evolution can also disguise the true evolutionary origin of some leaves. Some genera of ferns display complex leaves which are attached to the pseudostele by an outgrowth of the vascular bundle, leaving no leaf gap.[26] Further, horsetail (Equisetum) leaves bear only a single vein, and appear for all the world to be microphyllous; however, in the light of the fossil record and molecular evidence, we conclude that their forebears bore leaves with complex venation, and the current state is a result of secondary simplification.[32]

Deciduous trees deal with another disadvantage to having leaves. The popular belief that plants shed their leaves when the days get too short is misguided; evergreens prospered in the Arctic Circle during the most recent greenhouse earth.[33] The generally accepted reason for shedding leaves during winter is to cope with the weather: the force of wind and weight of snow are much more comfortably weathered without leaves to increase surface area. Seasonal leaf loss has evolved independently several times and is exhibited in the ginkgoales, pinophyta and angiosperms.[34] Leaf loss may also have arisen as a response to pressure from insects; it may have been less costly to lose leaves entirely during the winter or dry season than to continue investing resources in their repair.[35]

Evolution of trees

The early Devonian landscape was devoid of vegetation taller than waist height. Without the evolution of a robust vascular system, taller heights could not be attained. There was, however, a constant evolutionary pressure to attain greater height. The most obvious advantage is the harvesting of more sunlight for photosynthesis – by overshadowing competitors – but a further advantage is present in spore distribution, as spores (and, later, seeds) can be blown greater distances if they start higher. This may be demonstrated by Prototaxites, thought to be a late Silurian fungus reaching eight metres in height.[36]

In order to attain arborescence, early plants needed to develop woody tissue that would act as both support and water transport. To understand wood, we must know a little of vascular behaviour. The stele of plants undergoing "secondary growth" is surrounded by the vascular cambium, a ring of cells which produces more xylem (on the inside) and phloem (on the outside). Since xylem cells comprise dead, lignified tissue, subsequent rings of xylem are added to those already present, forming wood.

The first plants to develop this secondary growth, and a woody habit, were apparently the ferns, and as early as the middle Devonian one species, Wattieza, had already reached heights of 8 m and a tree-like habit.[37]

Other clades did not take long to develop a tree-like stature; the late Devonian Archaeopteris, a precursor to gymnosperms which evolved from the trimerophytes,[38] reached 30 m in height. These progymnosperms were the first plants to develop true wood, grown from a bifacial cambium, of which the first appearance is in the mid Devonian Rellimia.[39] True wood is only thought to have evolved once, giving rise to the concept of a "lignophyte" clade.

These Archaeopteris forests were soon supplemented by lycopods, in the form of lepidodendrales, which topped 50 m in height and 2 m across at the base. These lycopods rose to dominate late Devonian and Carboniferous coal deposits.[40] Lepidodendrales differ from modern trees in exhibiting determinate growth: after building up a reserve of nutrients at a low height, the plants would "bolt" to a genetically determined height, branch at that level, spread their spores and die.[41] They consisted of "cheap" wood to allow their rapid growth, with at least half of their stems comprising a pith-filled cavity.[23] Their wood was also generated by a unifacial vascular cambium; it did not produce new phloem, meaning that the trunks could not grow wider over time.

The horsetail Calamites was next on the scene, appearing in the Carboniferous. Unlike the modern horsetail Equisetum, Calamites had a unifacial vascular cambium, allowing it to develop wood and grow to heights in excess of 10 m. It also branched multiple times.

While the form of early trees was similar to that of today's, the groups containing all modern trees had yet to evolve.

The dominant groups today are the gymnosperms, which include the coniferous trees, and the angiosperms, which contain all fruiting and flowering trees. It was long thought that the angiosperms arose from within the gymnosperms, but recent molecular evidence suggests that their living representatives form two distinct groups.[42][43][44] It must be noted that the molecular data has yet to be fully reconciled with morphological data,[45][46][47] but it is becoming accepted that the morphological support for paraphyly is not especially strong.[48] This would lead to the conclusion that both groups arose from within the pteridosperms, probably as early as the Permian.[48]

The angiosperms and their ancestors played a very small role until they diversified during the Cretaceous. They started out as small, damp-loving organisms in the understory, and have been diversifying ever since the mid-Cretaceous, to become the dominant member of non-boreal forests today.

Evolution of roots

[Figure: The roots (bottom image) of lepidodendrales are thought to be functionally equivalent to the stems (top), as the similar appearance of "leaf scars" and "root scars" on these specimens from different species demonstrates.]

Roots are important to plants for two main reasons: Firstly, they provide anchorage to the substrate; more importantly, they provide a source of water and nutrients from the soil. Roots allowed plants to grow taller and faster.

The onset of roots also had effects on a global scale. By disturbing the soil, and promoting its acidification (by taking up nutrients such as nitrate and phosphate), they enabled it to weather more deeply, promoting the draw-down of CO2[49] with huge implications for climate.[50] These effects may have been so profound they led to a mass extinction.[51]

But how and when did roots evolve in the first place? While there are traces of root-like impressions in fossil soils in the late Silurian,[52] body fossils show the earliest plants to be devoid of roots. Many had tendrils which sprawled along or beneath the ground, with upright axes or thalli dotted here and there, and some even had non-photosynthetic subterranean branches which lacked stomata. The distinction between root and specialised branch is developmental; true roots follow a different developmental trajectory to stems. Further, roots differ in their branching pattern, and in possession of a root cap.[3] So while Silu-Devonian plants such as Rhynia and Horneophyton possessed the physiological equivalent of roots, roots – defined as organs differentiated from stems – did not arrive until later.[3] Unfortunately, roots are rarely preserved in the fossil record, and our understanding of their evolutionary origin is sparse.[3]

Rhizoids – small structures performing the same role as roots, usually one cell in diameter – probably evolved very early, perhaps even before plants colonised the land; they are recognised in the Characeae, an algal sister group to land plants.[3] That said, rhizoids probably evolved more than once; the rhizines of lichens, for example, perform a similar role. Even some animals (Lamellibrachia) have root-like structures![3]

More advanced structures are common in the Rhynie chert, and many other fossils of comparable early Devonian age bear structures that look like, and acted like, roots.[3] The rhyniophytes bore fine rhizoids, and the trimerophytes and herbaceous lycopods of the chert bore root-like structures penetrating a few centimetres into the soil.[53] However, none of these fossils display all the features borne by modern roots.[3] Roots and root-like structures became increasingly common and penetrated deeper during the Devonian period, with lycopod trees forming roots around 20 cm long during the Eifelian and Givetian. These were joined by progymnosperms, which rooted up to about a metre deep, during the ensuing Frasnian stage.[53] True gymnosperms and zygopterid ferns also formed shallow rooting systems during the Famennian stage.[53]

The rhizomorphs of the lycopods provide a slightly different approach to rooting. They were equivalent to stems, with organs equivalent to leaves performing the role of rootlets.[3] A similar construction is observed in the extant lycopod Isoetes, and this appears to be evidence that roots evolved independently at least twice, in the lycophytes and other plants.[3]

A vascular system is indispensable to rooted plants, as non-photosynthesising roots need a supply of sugars, and a vascular system is required to transport water and nutrients from the roots to the rest of the plant.[4] These plants are little more advanced than their Silurian forebears, without a dedicated root system; however, the flat-lying axes can be clearly seen to have growths similar to the rhizoids of bryophytes today.[54]

By the mid-to-late Devonian, most groups of plants had independently developed a rooting system of some nature.[54] As roots became larger, they could support larger trees, and the soil was weathered to a greater depth.[51] This deeper weathering had effects not only on the aforementioned drawdown of CO2, but also opened up new habitats for colonisation by fungi and animals.[53]

Modern roots have developed to their physical limits. They penetrate many metres of soil to tap the water table. The narrowest roots are a mere 40 μm in diameter, and could not physically transport water if they were any narrower.[3] The earliest fossil roots recovered, by contrast, narrowed from 3 mm to under 700 μm in diameter, although taphonomy ultimately controls which thicknesses are preserved for us to see.[3]

Arbuscular mycorrhizae

The efficiency of many plants' roots is increased via a symbiotic relationship with a fungal partner. The most common are arbuscular mycorrhizae (AM), literally "tree-like fungal roots". These comprise fungi which invade some root cells, filling the cells with their hyphae. They feed on the plant's sugars, but return nutrients generated or extracted from the soil (especially phosphate), which the plant would otherwise be unable to obtain.

This symbiosis appears to have evolved early in plant history. AM are found in all plant groups, and in 80% of extant vascular plants,[55] suggesting an early ancestry; a "plant"-fungus symbiosis may even have been the step that enabled plants to colonise the land.[56] Indeed, AM are abundant in the Rhynie chert;[57] the association occurred even before there were true roots to colonise, and it has even been suggested that roots evolved in order to provide a more comfortable habitat for mycorrhizal fungi.[58]

Evolution of seeds

Early land plants reproduced in the fashion of ferns: spores germinated into small gametophytes, which produced sperm. These would swim across moist soils to find the female organs (archegonia) on the same or another gametophyte, where they would fuse with an egg cell to produce an embryo, which would germinate into a sporophyte.[53]

This mode of reproduction restricted early plants to damp environments, moist enough that the sperm could swim to their destination. Therefore, early land plants were constrained to the lowlands, near shores and streams. The development of heterospory freed them from this constraint.

Heterosporous organisms, as their name suggests, bear spores of two sizes – microspores and megaspores. These would germinate to form microgametophytes and megagametophytes, respectively. This system paved the way for seeds: taken to the extreme, the megasporangia could bear only a single megaspore tetrad, and to complete the transition to true seeds, three of the megaspores in the original tetrad could be aborted, leaving one megaspore per megasporangium.

The transition to seeds continued with this megaspore being "boxed in" to its sporangium while it germinates. Then, the megagametophyte is contained within a waterproof integument, which forms the bulk of the seed. The microgametophyte – a pollen grain which has germinated from a microspore – is employed for dispersal, only releasing its desiccation-prone sperm when it reaches a receptive megagametophyte.[23]

Lycopods go a fair way down the path to seeds without ever crossing the threshold. Fossil lycopod megaspores reaching 1 cm in diameter, and surrounded by vegetative tissue, are known – these even germinate into a megagametophyte in situ. However, they fall short of being seeds, since the nucellus, an inner spore-covering layer, does not completely enclose the spore. A very small slit remains, meaning that the spore is still exposed to the atmosphere. This has two consequences – firstly, it means it is not fully resistant to desiccation, and secondly, sperm do not have to "burrow" to access the archegonia of the megaspore.[23]

The first "spermatophytes" (literally: seed plants) – that is, the first plants to bear true seeds – are called pteridosperms: literally, "seed ferns", so called because their foliage consisted of fern-like fronds, although they were not closely related to ferns. The oldest fossil evidence of seed plants is of Late Devonian age and they appear to have evolved out of an earlier group known as the progymnosperms. These early seed plants ranged from trees to small, rambling shrubs; like most early progymnosperms, they were woody plants with fern-like foliage. They all bore ovules, but no cones, fruit or similar. While it is difficult to track the early evolution of seeds, we can trace the lineage of the seed ferns from the simple trimerophytes through homosporous Aneurophytes.[23]

This seed model is shared by basically all gymnosperms (literally: "naked seeds"), most of which encase their seeds in a woody or fleshy cone (fleshy in the yew, for example), but none of which fully enclose their seeds. The angiosperms ("vessel seeds") are the only group to fully enclose the seed, in a carpel.

Fully enclosed seeds opened up a new pathway for plants to follow: that of seed dormancy. The embryo, completely isolated from the external atmosphere and hence protected from desiccation, could survive some years of drought before germinating. Gymnosperm seeds from the late Carboniferous have been found to contain embryos, suggesting a lengthy gap between fertilisation and germination.[59] This period is associated with the entry into a greenhouse earth period, with an associated increase in aridity. This suggests that dormancy arose as a response to drier climatic conditions, where it became advantageous to wait for a moist period before germinating.[59] This evolutionary breakthrough appears to have opened a floodgate: previously inhospitable areas, such as dry mountain slopes, could now be tolerated, and were soon covered by trees.[59]

Seeds offered further advantages to their bearers: they increased the success rate of fertilised gametophytes, and because a nutrient store could be "packaged" in with the embryo, the seeds could germinate rapidly in inhospitable environments, reaching a size where the seedling could fend for itself more quickly.[53] For example, without such a reserve, seedlings growing in arid environments would not have the resources to grow roots deep enough to reach the water table before they expired.[53] Likewise, seeds germinating in a gloomy understory require an additional reserve of energy to quickly grow high enough to capture sufficient light for self-sustenance.[53] A combination of these advantages gave seed plants the ecological edge over the previously dominant genus Archaeopteris, thus increasing the biodiversity of early forests.[53]

Evolution of flowers

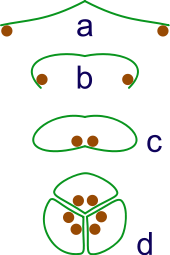

a: sporangia borne at tips of leaf

b: Leaf curls up to protect sporangia

c: leaf curls to form enclosed roll

d: grouping of three rolls into a syncarp

Flowers are modified leaves possessed only by the group known as the angiosperms, which are relatively late to appear in the fossil record. Colourful and/or pungent structures surround the cones of plants such as cycads and gnetales, making a strict definition of the term "flower" elusive.[47]

The flowering plants have long been assumed to have evolved from within the gymnosperms; according to the traditional morphological view, they are closely allied to the gnetales. However, as noted above, recent molecular evidence is at odds with this hypothesis,[43][44] and further suggests that gnetales are more closely related to some gymnosperm groups than to angiosperms,[42] and that extant gymnosperms form a clade distinct from the angiosperms,[43][44][42] the two clades diverging some 300 million years ago.[60]

Phylogeny of anthophytes and gymnosperms, from [61]: traditional view and modern view.

The relationship of stem groups to the angiosperms is of utmost importance in determining the evolution of flowers; stem groups provide an insight into the state of earlier "forks" on the path to the current state. If we identify an unrelated group as a stem group, then we will gain an incorrect picture of the lineage's history. The traditional view that flowers arose by modification of a structure similar to that of the gnetales, for example, no longer holds in the light of the molecular data.

Convergence increases our chances of misidentifying stem groups. Since the protection of the megagametophyte is evolutionarily desirable, it would be unsurprising if many separate groups stumbled upon protective encasements independently. Distinguishing ancestry in such a situation, especially where we usually only have fossils to go on, is tricky – to say the least.

In flowers, this protection is offered by the carpel, an organ believed to represent an adapted leaf, recruited into a protective role, shielding the ovules. These ovules are further protected by a double-walled integument.

Penetration of these protective layers needs something more than a free-floating microgametophyte. Angiosperms have pollen grains comprising just three cells. One cell is responsible for growing a pollen tube down through the protective tissues, creating a conduit for the two sperm cells to flow down. The megagametophyte has just seven cells; of these, the egg cell fuses with one sperm cell to form the zygote, and another, the central cell, joins with the other sperm and dedicates itself to forming a nutrient-rich endosperm. The other cells take auxiliary roles. This process of "double fertilisation" is unique and common to all angiosperms.

In the fossil record, there are three intriguing groups which bore flower-like structures. The first is the Permian pteridosperm Glossopteris, which already bore recurved leaves resembling carpels. The Triassic Caytonia is more flower-like still, with enclosed ovules – but only a single integument. Further, details of their pollen and stamens set them apart from true flowering plants.

The Bennettitales bore remarkably flower-like organs, protected by whorls of bracts which may have played a similar role to the petals and sepals of true flowers; however, these flower-like structures evolved independently, as the Bennettitales are more closely related to cycads and ginkgos than to the angiosperms.[61]

However, no true flowers are found in any groups save those extant today. Most morphological and molecular analyses place Amborella, the nymphaeales and Austrobaileyaceae in a basal clade dubbed "ANA". This clade appears to have diverged in the early Cretaceous, around 130 million years ago – around the same time as the earliest fossil angiosperm,[62][63] and just after the first angiosperm-like pollen, 136 million years ago.[48] The magnoliids diverged soon after, and a rapid radiation had produced eudicots and monocots by 125 million years ago.[48] By the end of the Cretaceous 65.5 million years ago, over 50% of today's angiosperm orders had evolved, and the clade accounted for 70% of global species.[64] It was around this time that flowering trees became dominant over conifers.[65]

The features of the basal "ANA" groups suggest that angiosperms originated in dark, damp, frequently disturbed areas.[66] It appears that the angiosperms remained constrained to such habitats throughout the Cretaceous – occupying the niche of small herbs early in the successional series.[64] This may have restricted their initial significance, but may also have given them the flexibility that accounted for the rapidity of their later diversifications in other habitats.[66]

Advances in metabolism

The most recent major innovation by the plants is the development of the C4 metabolic pathway.

Photosynthesis is not quite as simple as adding water to CO2 to produce sugars and oxygen. A complex chemical pathway is involved, facilitated along the way by a range of enzymes and co-enzymes. The enzyme RuBisCO is responsible for "fixing" CO2; that is, it attaches it to a carbon-based molecule (ribulose-1,5-bisphosphate), and the products are then converted into sugars that can be used by the plant. However, the enzyme is notoriously inefficient, and will also fix oxygen instead of CO2 in a process called photorespiration. This is energetically costly, as the plant has to use energy to turn the products of photorespiration back into a form that can react with CO2.

Concentrating carbon

To work around this inefficiency, some plants evolved carbon concentrating mechanisms. These work by increasing the concentration of CO2 around RuBisCO, thereby increasing the amount of photosynthesis and decreasing photorespiration. The process of concentrating CO2 around RuBisCO requires more energy than allowing gases to diffuse, but under certain conditions, namely warm temperatures (>25°C), low CO2 concentrations, or high oxygen concentrations, it pays off in terms of the decreased loss of sugars through photorespiration.

One method, C4 metabolism, employs a so-called Kranz anatomy. This transports CO2 through an outer mesophyll layer, via a range of organic molecules, to the central bundle sheath cells, where the CO2 is released. In this way, CO2 is concentrated near the site of RuBisCO operation. Because RuBisCO is operating in an environment with much more CO2 than it otherwise would be, it performs more efficiently.

A second method, CAM photosynthesis, temporally separates the uptake of CO2 from the action of RuBisCO. RuBisCO only operates during the day, when stomata are sealed and CO2 is provided by the breakdown of the chemical malate. CO2 is harvested from the atmosphere and stored as malate when the stomata open during the cool, moist nights; keeping the stomata closed by day reduces water loss.

Evolutionary record

These two pathways, with the same effect on RuBisCO, evolved a number of times independently; indeed, C4 alone arose in 18 different plant families. The C4 construction is most famously used by a subset of grasses, while CAM is employed by many succulents and cacti. The C4 trait appears to have emerged during the Oligocene, around 25 to 32 million years ago;[67] however, C4 plants did not become ecologically significant until the late Miocene.[68] Remarkably, some charcoalified fossils preserve tissue organised into the Kranz anatomy, with intact bundle sheath cells,[69] allowing the presence of C4 metabolism to be identified without doubt at this time. To deduce the distribution and significance of C4 plants, we resort to isotopic markers. Plants preferentially use the lighter of the two stable isotopes of carbon in the atmosphere, 12C, which is more readily involved in the chemical pathways of its fixation. This discrimination is strongest in C3 plants; in C4 plants, the first fixation step, catalysed by the enzyme PEP carboxylase, discriminates much less against the heavier 13C, so C4 plant material is isotopically heavier. Plant material can be analysed to deduce the ratio of 13C to 12C, which is reported as δ13C: the deviation, in parts per thousand (‰), of the sample's ratio from that of a reference standard. C3 plants average roughly −28‰, while C4 plants average roughly −14‰. The δ13C of CAM plants depends on the percentage of carbon fixed at night relative to what is fixed in the day, being closer to C3 plants if they fix most carbon in the day and closer to C4 plants if they fix all their carbon at night.[70]

Procuring original fossil grass material in sufficient quantity to analyse is troublesome, but fortunately we have a good proxy: horses. Horses were globally widespread in the period of interest, and fed almost exclusively on grasses. There is an old phrase in isotope palæontology, "you are what you eat (plus a little bit)"; this refers to the fact that organisms reflect the isotopic composition of whatever they eat, plus a small adjustment factor. There is a good record of horse teeth throughout the globe, and their δ13C has been measured. The record shows a sharp shift towards heavier (less negative) values during the Messinian, about 7 to 5 million years ago, and this is interpreted as the rise of C4 plants on a global scale.[68]
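The δ13C notation and the mixing reasoning applied to CAM plants and horse teeth can be made concrete with a short calculation. The Python below is a minimal sketch under stated assumptions, not a method taken from the cited studies: it assumes simple linear mixing between a C3 and a C4 end member, uses illustrative end-member values of −28‰ (C3) and −14‰ (C4), and treats the tissue-to-diet offset ("plus a little bit") as a caller-supplied parameter whose value the article does not give. The function names are hypothetical.

```python
"""Sketch: delta-13C notation and a two-end-member mixing model.

Assumptions (not from the article): linear mixing between a C3 and a C4
end member, with illustrative end-member values of about -28 permil (C3)
and -14 permil (C4) relative to the reference standard.
"""


def delta13c(ratio_sample: float, ratio_standard: float) -> float:
    """Return delta-13C in permil: the deviation of the sample's 13C/12C
    ratio from the standard's ratio, in parts per thousand."""
    return (ratio_sample / ratio_standard - 1.0) * 1000.0


def c4_fraction(delta_sample: float,
                delta_c3: float = -28.0,
                delta_c4: float = -14.0) -> float:
    """Estimate the fraction of carbon contributed by the C4 end member.

    Solves delta_sample = f * delta_c4 + (1 - f) * delta_c3 for f, then
    clamps f to [0, 1] because real samples scatter beyond idealised
    end members. For a CAM plant, f can be read (roughly) as the share
    of carbon fixed at night.
    """
    f = (delta_sample - delta_c3) / (delta_c4 - delta_c3)
    return min(1.0, max(0.0, f))


def diet_c4_fraction(delta_tissue: float, offset: float,
                     **end_members: float) -> float:
    """'You are what you eat (plus a little bit)': remove an assumed
    tissue-to-diet offset, then apply the same mixing model."""
    return c4_fraction(delta_tissue - offset, **end_members)


if __name__ == "__main__":
    # A sample whose ratio is 1% above the standard sits at +10 permil.
    print(round(delta13c(1.01, 1.0), 6))  # 10.0
    # A value midway between the end members implies half C4-like carbon.
    print(c4_fraction(-21.0))  # 0.5
    # Hypothetical tooth value and hypothetical offset, for illustration only.
    print(diet_c4_fraction(-7.0, offset=10.0))
```

Because the end members and the offset are parameters rather than constants, the same inversion can be rerun with whatever calibrated values a particular study uses; the numbers here only illustrate the arithmetic.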

When is C4 an advantage?

While C4 enhances the efficiency of RuBisCO, the concentration of carbon is highly energy intensive. This means that C4 plants only have an advantage over C3 organisms in certain conditions: namely, high temperatures and low rainfall. C4 plants also need high levels of sunlight in order to thrive.[71] Models suggest that without wildfires removing shade-casting trees and shrubs, there would be no space for C4 plants.[72] But wildfires have occurred for 400 million years – why did C4 take so long to arise, and then appear independently so many times? The Carboniferous period (~300 million years ago) had notoriously high oxygen levels – almost enough to allow spontaneous combustion[73] – and very low CO2, but there is no C4 isotopic signature to be found. And there doesn't seem to be a sudden trigger for the Miocene rise.

During the Miocene, the atmosphere and climate were relatively stable. If anything, CO2 increased gradually from 14 to 9 million years ago before settling down to concentrations similar to the Holocene.[74] This suggests that CO2 did not have a key role in invoking C4 evolution.[67] Grasses themselves (the group which would give rise to the most occurrences of C4) had probably been around for 60 million years or more, so had had plenty of time to evolve C4,[75][76] which in any case is present in a diverse range of groups and thus evolved independently. There is a strong signal of climate change in South Asia;[67] increasing aridity, and hence increasing fire frequency and intensity, may have led to an increase in the importance of grasslands.[77] However, this is difficult to reconcile with the North American record.[67] It is possible that the signal is entirely biological, forced by an acceleration of grass evolution driven by fire (and perhaps by elephants),[78] which, both by increasing weathering and by incorporating more carbon into sediments, reduced atmospheric CO2 levels.[78] Finally, there is evidence that the onset of C4 from 9 to 7 million years ago is a biased signal, which only holds true for North America, from where most samples originate; emerging evidence suggests that grasslands evolved to a dominant state at least 15 million years earlier in South America.

Evolutionary trends

The process of evolution works slightly differently in plants than in animals. Differences in plant physiology and reproduction mean that while the same evolutionary principles of natural selection apply, the finer nuances of their effect are radically different.

One major difference is the ability of plants to reproduce clonally, and the totipotent nature of their cells, allowing them to reproduce asexually much more easily than most animals. They are also capable of polyploidy – where more than two chromosome sets are inherited from parents. This allows relatively fast bursts of evolution to occur. The long periods of dormancy that seed plants can employ also make them less vulnerable to extinction, as they can "sit out" the tough periods and wait until more clement times to leap back to life.

The effect of these differences is most profoundly seen during extinction events. These events, which wiped out between 6 and 62% of terrestrial animal families, had "negligible" effect on plant families.[79] However, the ecosystem structure is significantly rearranged, with the abundances and distributions of different groups of plants changing profoundly.[79] These effects are perhaps due to the higher diversity within families, as extinction – which was common at the species level – was very selective. For example, wind-pollinated species survived better than insect-pollinated taxa, and specialised species generally lost out.[79] In general, the surviving taxa were rare before the extinction, suggesting that they were generalists who were poor competitors when times were easy, but prospered when specialised groups went extinct and left ecological niches vacant.[79]

See also

- Plants

- General evolution

- Evolutionary history of life

- Timeline of plant evolution

- Timeline of evolution

- Study of plants

- Paleobotany

- Plant evolutionary developmental biology

- Cryptospores

- Plant interactions

References

- ↑ "The oldest fossils reveal evolution of non-vascular plants by the middle to late Ordovician Period (~450-440 m.y.a.) on the basis of fossil spores" Transition of plants to land

- ↑ Rothwell, G. W., Scheckler, S. E. & Gillespie, W. H. (1989). "Elkinsia gen. nov., a Late Devonian gymnosperm with cupulate ovules." Botanical Gazette, 150: 170-189.

- ↑ 3.00 3.01 3.02 3.03 3.04 3.05 3.06 3.07 3.08 3.09 3.10 3.11 3.12 3.13 3.14 Raven, J.A.; Edwards, D. (2001). "Roots: evolutionary origins and biogeochemical significance". Journal of Experimental Botany 52 (90001): 381–401. doi:10.1093/jexbot/52.suppl_1.381. http://jxb.oxfordjournals.org/cgi/content/full/52/suppl_1/381.

- ↑ 4.0 4.1 Kenrick, P.; Crane, P.R. (1997). The origin and early diversification of land plants. A cladistic study. Washington & London: Smithsonian Institution Press. ISBN 1-56098-729-4.

- ↑ PMID 11498589 (PubMed)

- ↑ Battison, Leila; Brasier, Martin D. (August 2009). "Exceptional Preservation of Early Terrestrial Communities in Lacustrine Phosphate One Billion Years Ago". In Smith, Martin R.; O'Brien, Lorna J.; Caron, Jean-Bernard. International Conference on the Cambrian Explosion (Walcott 2009). Abstract Volume. Toronto, Ontario, Canada: The Burgess Shale Consortium. 31st July 2009. ISBN 978-0-9812885-1-2. http://burgess-shale.info/abstract/brasier-1.

- ↑ doi: 10.1038/nature08213

- ↑ 8.0 8.1 8.2 8.3 8.4 Gray, J.; Chaloner, W. G.; Westoll, T. S. (1985). "The Microfossil Record of Early Land Plants: Advances in Understanding of Early Terrestrialization, 1970-1984". Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences (1934-1990) 309 (1138): 167–195. doi:10.1098/rstb.1985.0077. http://links.jstor.org/sici?sici=0080-4622(19850402)309%3A1138%3C167%3ATMROEL%3E2.0.CO%3B2-E. Retrieved 2008-04-26.

- ↑ doi:10.1098/rstb.2000.0612

- ↑ Wellman, C.H.; Osterloff, P.L.; Mohiuddin, U. (2003). "Fragments of the earliest land plants". Nature 425 (6955): 282–285. doi:10.1038/nature01884. PMID 13679913.

- ↑ doi:10.1130/G21295.1

- ↑ doi:10.1111/j.1472-4669.2009.00188.x

- ↑ doi: 10.1126/science.1169659

- ↑ doi:10.1130/2006.2399(02)

- ↑ doi:10.1073/pnas.0604090103

- ↑ 16.0 16.1 16.2 16.3 Stewart, W.N. and Rothwell, G.W. 1993. Paleobotany and the evolution of plants, Second edition. Cambridge University Press, Cambridge, UK. ISBN 0-521-38294-7

- ↑ 17.0 17.1 17.2 17.3 C. Kevin Boyce (2008). "How green was Cooksonia? The importance of size in understanding the early evolution of physiology in the vascular plant lineage". Paleobiology 34: 179. doi:10.1666/0094-8373(2008)034[0179:HGWCTI]2.0.CO;2.

- ↑ 18.00 18.01 18.02 18.03 18.04 18.05 18.06 18.07 18.08 18.09 18.10 18.11 18.12 18.13 18.14 18.15 18.16 18.17 18.18 18.19 18.20 18.21 18.22 18.23 18.24 18.25 18.26 18.27 18.28 18.29 18.30 18.31 18.32 18.33 JSTOR 3691719

- ↑ JSTOR 2400461

- ↑ 20.0 20.1 20.2 20.3 20.4 20.5 20.6 20.7 JSTOR 2408738

- ↑ PMID 17747811 (PubMed)

- ↑ Qiu, Y.L.; Li, L.; Wang, B.; Chen, Z.; Knoop, V.; Groth-malonek, M.; Dombrovska, O.; Lee, J.; Kent, L.; Rest, J.; et al. (2006). "The deepest divergences in land plants inferred from phylogenomic evidence". Proceedings of the National Academy of Sciences 103 (42): 15511. doi:10.1073/pnas.0603335103. PMID 17030812.

- ↑ 23.0 23.1 23.2 23.3 23.4 23.5 23.6 23.7 23.8 Stewart, W.N.; Rothwell, G.W. (1993). Paleobotany and the evolution of plants. Cambridge University Press. 521 pp.

- ↑ Zosterophyllophytes

- ↑ Rickards, R.B. (2000). "The age of the earliest club mosses: the Silurian Baragwanathia flora in Victoria, Australia" (abstract). Geological Magazine 137 (2): 207–209. doi:10.1017/S0016756800003800. http://geolmag.geoscienceworld.org/cgi/content/abstract/137/2/207. Retrieved 2007-10-25.

- ↑ 26.0 26.1 26.2 26.3 26.4 Kaplan, D.R. (2001). "The Science of Plant Morphology: Definition, History, and Role in Modern Biology". American Journal of Botany 88 (10): 1711–1741. doi:10.2307/3558347. http://links.jstor.org/sici?sici=0002-9122(200110)88%3A10%3C1711%3ATSOPMD%3E2.0.CO%3B2-T. Retrieved 2008-01-31.

- ↑ Taylor, T.N.; Hass, H.; Kerp, H.; Krings, M.; Hanlin, R.T. (2005). "Perithecial ascomycetes from the 400 million year old Rhynie chert: an example of ancestral polymorphism" (abstract). Mycologia 97 (1): 269–285. doi:10.3852/mycologia.97.1.269. PMID 16389979. http://www.mycologia.org/cgi/content/abstract/97/1/269. Retrieved 2008-04-07.

- ↑ 28.0 28.1 28.2 Boyce, C.K.; Knoll, A.H. (2002). "Evolution of developmental potential and the multiple independent origins of leaves in Paleozoic vascular plants". Paleobiology 28 (1): 70–100. doi:10.1666/0094-8373(2002)028<0070:EODPAT>2.0.CO;2.

- ↑ Hao, S.; Beck, C.B.; Deming, W. (2003). "Structure of the Earliest Leaves: Adaptations to High Concentrations of Atmospheric CO2". International Journal of Plant Sciences 164 (1): 71–75. doi:10.1086/344557.

- ↑ Berner, R.A.; Kothavala, Z. (2001). "Geocarb III: A Revised Model of Atmospheric CO2 over Phanerozoic Time" (abstract). American Journal of Science 301 (2): 182. doi:10.2475/ajs.301.2.182. http://ajsonline.org/cgi/content/abstract/301/2/182. Retrieved 2008-04-07.

- ↑ Beerling, D.J.; Osborne, C.P.; Chaloner, W.G. (2001). "Evolution of leaf-form in land plants linked to atmospheric CO2 decline in the Late Palaeozoic era". Nature 410 (6826): 287–394. doi:10.1038/35066546. PMID 11268207.

- ↑ Taylor, T.N.; Taylor, E.L. (1993). The biology and evolution of fossil plants.

- ↑ Shellito, C.J.; Sloan, L.C. (2006). "Reconstructing a lost Eocene paradise: Part I. Simulating the change in global floral distribution at the initial Eocene thermal maximum". Global and Planetary Change 50 (1-2): 1–17. doi:10.1016/j.gloplacha.2005.08.001. http://linkinghub.elsevier.com/retrieve/pii/S0921818105001475. Retrieved 2008-04-08.

- ↑ Aerts, R. (1995). "The advantages of being evergreen". Trends in Ecology & Evolution 10 (10): 402–407. doi:10.1016/S0169-5347(00)89156-9.

- ↑ Labandeira, C.C.; Dilcher, D.L.; Davis, D.R.; Wagner, D.L. (1994). "Ninety-seven million years of angiosperm-insect association: paleobiological insights into the meaning of coevolution". Proceedings of the National Academy of Sciences of the United States of America 91 (25): 12278–12282. doi:10.1073/pnas.91.25.12278. PMID 11607501.

- ↑ Boyce, K.C.; Hotton, C.L.; Fogel, M.L.; Cody, G.D.; Hazen, R.M.; Knoll, A.H.; Hueber, F.M. (May 2007). "Devonian landscape heterogeneity recorded by a giant fungus" (PDF). Geology 35 (5): 399–402. doi:10.1130/G23384A.1. http://geology.geoscienceworld.org/cgi/reprint/35/5/399.pdf.

- ↑ Stein, W.E.; Mannolini, F.; Hernick, L.V.; Landing, E.; Berry, C.M. (2007). "Giant cladoxylopsid trees resolve the enigma of the Earth's earliest forest stumps at Gilboa.". Nature 446 (7138): 904–7. doi:10.1038/nature05705. PMID 17443185.

- ↑ Retallack, G.J.; Catt, J.A.; Chaloner, W.G. (1985). "Fossil Soils as Grounds for Interpreting the Advent of Large Plants and Animals on Land [and Discussion]". Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences 309 (1138): 105–142. doi:10.1098/rstb.1985.0074. http://links.jstor.org/sici?sici=0080-4622(19850402)309%3A1138%3C105%3AFSAGFI%3E2.0.CO%3B2-5. Retrieved 2008-04-07.

- ↑ Dannenhoffer, J.M.; Bonamo, P.M. (1989). "Rellimia thomsonii from the Givetian of New York: Secondary Growth in Three Orders of Branching". American Journal of Botany 76 (9): 1312–1325. doi:10.2307/2444557. http://links.jstor.org/sici?sici=0002-9122(198909)76:9%3C1312:RTFTGO%3E2.0.CO;2-S. Retrieved 2008-04-07.

- ↑ Davis, P; Kenrick, P. (2004). Fossil Plants. Smithsonian Books, Washington D.C.. ISBN 1588341569.

- ↑ Donoghue, M.J. (2005). "Key innovations, convergence, and success: macroevolutionary lessons from plant phylogeny" (abstract). Paleobiology 31 (2): 77–93. doi:10.1666/0094-8373(2005)031[0077:KICASM]2.0.CO;2. http://paleobiol.geoscienceworld.org/cgi/content/abstract/31/2_Suppl/77. Retrieved 2008-04-07.

- ↑ 42.0 42.1 42.2 Bowe, L.M.; Coat, G.; Depamphilis, C.W. (2000). "Phylogeny of seed plants based on all three genomic compartments: Extant gymnosperms are monophyletic and Gnetales' closest relatives are conifers". Proceedings of the National Academy of Sciences 97 (8): 4092. doi:10.1073/pnas.97.8.4092.

- ↑ 43.0 43.1 43.2 Chaw, S.M.; Parkinson, C.L.; Cheng, Y.; Vincent, T.M.; Palmer, J.D. (2000). "Seed plant phylogeny inferred from all three plant genomes: Monophyly of extant gymnosperms and origin of Gnetales from conifers". Proceedings of the National Academy of Sciences 97 (8): 4086. doi:10.1073/pnas.97.8.4086.

- ↑ 44.0 44.1 44.2 Soltis, D.E.; Soltis, P.S.; Zanis, M.J. (2002). "Phylogeny of seed plants based on evidence from eight genes" (abstract). American Journal of Botany 89 (10): 1670. doi:10.3732/ajb.89.10.1670. http://amjbot.org/cgi/content/abstract/89/10/1670. Retrieved 2008-04-08.

- ↑ Friis, E.M.; Pedersen, K.R.; Crane, P.R. (2006). "Cretaceous angiosperm flowers: Innovation and evolution in plant reproduction". Palaeogeography, Palaeoclimatology, Palaeoecology 232 (2-4): 251–293. doi:10.1016/j.palaeo.2005.07.006.

- ↑ Hilton, J.; Bateman, R.M. (2006). "Pteridosperms are the backbone of seed-plant phylogeny". The Journal of the Torrey Botanical Society 133 (1): 119–168. doi:10.3159/1095-5674(2006)133[119:PATBOS]2.0.CO;2.

- ↑ 47.0 47.1 Bateman, R.M.; Hilton, J.; Rudall, P.J. (2006). "Morphological and molecular phylogenetic context of the angiosperms: contrasting the 'top-down' and 'bottom-up' approaches used to infer the likely characteristics of the first flowers". Journal of Experimental Botany 57 (13): 3471. doi:10.1093/jxb/erl128. PMID 17056677.

- ↑ 48.0 48.1 48.2 48.3 Frohlich, M.W.; Chase, M.W. (2007). "After a dozen years of progress the origin of angiosperms is still a great mystery". Nature 450 (7173): 1184–9. doi:10.1038/nature06393. PMID 18097399.

- ↑ Mora, C.I.; Driese, S.G.; Colarusso, L.A. (1996). "Middle to Late Paleozoic Atmospheric CO2 Levels from Soil Carbonate and Organic Matter". Science 271 (5252): 1105–1107. doi:10.1126/science.271.5252.1105.

- ↑ Berner, R.A. (1994). "GEOCARB II: A revised model of atmospheric CO2 over Phanerozoic time". Am. J. Sci. 294: 56–91. doi:10.2475/ajs.294.1.56.

- ↑ 51.0 51.1 Algeo, T.J.; Berner, R.A.; Maynard, J.B.; Scheckler, S.E. (1995). "Late Devonian Oceanic Anoxic Events and Biotic Crises: "Rooted" in the Evolution of Vascular Land Plants?". GSA Today 5 (3). https://rock.geosociety.org/pubs/gsatoday/gsat9503.htm.

- ↑ Retallack, G.J. (1986). In Wright, V.P. (ed.). Paleosols: their Recognition and Interpretation. Oxford: Blackwell.

- ↑ 53.0 53.1 53.2 53.3 53.4 53.5 53.6 53.7 53.8 Algeo, T.J.; Scheckler, S.E. (1998). "Terrestrial-marine teleconnections in the Devonian: links between the evolution of land plants, weathering processes, and marine anoxic events". Philosophical Transactions of the Royal Society B: Biological Sciences 353 (1365): 113–130. doi:10.1098/rstb.1998.0195.

- ↑ 54.0 54.1 Kenrick, P.; Crane, P.R. (1997). "The origin and early evolution of plants on land". Nature 389 (6646): 33. doi:10.1038/37918.

- ↑ Schüßler, A. et al. (2001). "A new fungal phylum, the Glomeromycota: phylogeny and evolution". Mycol. Res. 105 (12): 1416. doi:10.1017/S0953756201005196. http://journals.cambridge.org/action/displayAbstract?fromPage=online&aid=95091.

- ↑ Simon, L.; Bousquet, J.; Levesque, C.; Lalonde, M. (1993). "Origin and diversification of endomycorrhizal fungi and coincidence with vascular land plants". Nature 363: 67–69. doi:10.1038/363067a0.

- ↑ Remy, W.; Taylor, T.; Hass, H.; Kerp, H. (1994). "Four hundred-million-year-old vesicular arbuscular mycorrhizae". Proceedings of the National Academy of Sciences USA 91: 11841–11843. doi:10.1073/pnas.91.25.11841.

- ↑ Brundrett, M.C. (2002). "Coevolution of roots and mycorrhizas of land plants". New Phytologist 154 (2): 275–304. doi:10.1046/j.1469-8137.2002.00397.x.

- ↑ 59.0 59.1 59.2 Mapes, G.; Rothwell, G.W.; Haworth, M.T. (1989). "Evolution of seed dormancy". Nature 337 (6208): 645–646. doi:10.1038/337645a0.

- ↑ Nam, J.; dePamphilis, C.W.; Ma, H.; Nei, M. (2003). "Antiquity and Evolution of the MADS-Box Gene Family Controlling Flower Development in Plants". Mol. Biol. Evol. 20 (9): 1435–1447. doi:10.1093/molbev/msg152. PMID 12777513. http://mbe.oxfordjournals.org/cgi/content/full/20/9/1435.

- ↑ 61.0 61.1 Crepet, W. L. (2000). "Progress in understanding angiosperm history, success, and relationships: Darwin's abominably "perplexing phenomenon"". Proceedings of the National Academy of Sciences 97: 12939. doi:10.1073/pnas.97.24.12939. PMID 11087846. http://www.pnas.org/cgi/reprint/97/24/12939.

- ↑ Sun, G.; Ji, Q.; Dilcher, D.L.; Zheng, S.; Nixon, K.C.; Wang, X. (2002). "Archaefructaceae, a New Basal Angiosperm Family". Science 296 (5569): 899. doi:10.1126/science.1069439. PMID 11988572.

- ↑ In fact, Archaefructus probably did not bear true flowers: see

- Friis, E.M.; Doyle, J.A.; Endress, P.K.; Leng, Q. (2003). "Archaefructus--angiosperm precursor or specialized early angiosperm?". Trends in Plant Science 8 (8): 369–373. doi:10.1016/S1360-1385(03)00161-4. PMID 12927969.

- ↑ 64.0 64.1 Wing, S.L.; Boucher, L.D. (1998). "Ecological Aspects Of The Cretaceous Flowering Plant Radiation". Annual Review of Earth and Planetary Sciences 26 (1): 379–421. doi:10.1146/annurev.earth.26.1.379.

- ↑ Stewart, W.N.; Rothwell, G.W. (1993). Paleobotany and the Evolution of Plants (2nd ed.). Cambridge University Press. p. 498.

- ↑ 66.0 66.1 Feild, T.S.; Arens, N.C.; Doyle, J.A.; Dawson, T.E.; Donoghue, M.J. (2004). "Dark and disturbed: a new image of early angiosperm ecology" (abstract). Paleobiology 30 (1): 82–107. doi:10.1666/0094-8373(2004)030<0082:DADANI>2.0.CO;2. http://paleobiol.geoscienceworld.org/cgi/content/abstract/30/1/82. Retrieved 2008-04-08.

- ↑ 67.0 67.1 67.2 67.3 Osborne, C.P.; Beerling, D.J. (2006). "Review. Nature's green revolution: the remarkable evolutionary rise of C4 plants". Philosophical Transactions: Biological Sciences 361 (1465): 173–194. doi:10.1098/rstb.2005.1737. PMID 16553316. PMC 1626541. http://www.journals.royalsoc.ac.uk/index/YTH8204514044972.pdf. Retrieved 2008-02-11.

- ↑ 68.0 68.1 JSTOR 3515337

- ↑ Thomasson, J.R.; Nelson, M.E.; Zakrzewski, R.J. (1986). "A Fossil Grass (Gramineae: Chloridoideae) from the Miocene with Kranz Anatomy". Science 233 (4766): 876–878. doi:10.1126/science.233.4766.876. PMID 17752216.

- ↑ O'Leary, M. (May 1988). "Carbon Isotopes in Photosynthesis". BioScience 38 (5): 328–336. doi:10.2307/1310735. http://www.jstor.org/pss/1310735.

- ↑ doi:10.1098/rspb.2008.1762

- ↑ Bond, W.J.; Woodward, F.I.; Midgley, G.F. (2005). "The global distribution of ecosystems in a world without fire". New Phytologist 165 (2): 525–538. doi:10.1111/j.1469-8137.2004.01252.x. PMID 15720663.

- ↑ Above 35% atmospheric oxygen, the spread of fire is unstoppable. Many models of past atmospheric composition predicted higher values and had to be revised, because plant life never suffered a total extinction.

- ↑ Pagani, M.; Zachos, J.C.; Freeman, K.H.; Tipple, B.; Bohaty, S. (2005). "Marked Decline in Atmospheric Carbon Dioxide Concentrations During the Paleogene". Science 309 (5734): 600–603. doi:10.1126/science.1110063. PMID 15961630.

- ↑ Piperno, D.R.; Sues, H.D. (2005). "Dinosaurs Dined on Grass". Science 310 (5751): 1126. doi:10.1126/science.1121020. PMID 16293745.

- ↑ Prasad, V.; Strömberg, C.A.E.; Alimohammadian, H.; Sahni, A. (2005). "Dinosaur Coprolites and the Early Evolution of Grasses and Grazers". Science 310 (5751): 1177–1180. doi:10.1126/science.1118806. PMID 16293759.

- ↑ Keeley, J.E.; Rundel, P.W. (2005). "Fire and the Miocene expansion of C4 grasslands". Ecology Letters 8 (7): 683–690. doi:10.1111/j.1461-0248.2005.00767.x.

- ↑ 78.0 78.1 Retallack, G.J. (2001). "Cenozoic Expansion of Grasslands and Climatic Cooling". The Journal of Geology 109 (4): 407–426. doi:10.1086/320791.

- ↑ 79.0 79.1 79.2 79.3 McElwain, J.C.; Punyasena, S.W. (2007). "Mass extinction events and the plant fossil record". Trends in Ecology & Evolution 22 (10): 548–557. doi:10.1016/j.tree.2007.09.003. PMID 17919771.
